Introducing LEAP: The Longitudinal Expert AI Panel

Every month, we ask top computer scientists, economists, industry leaders, policy experts and superforecasters for their AI predictions. Here’s what we learned from the first three months of forecasts.

AI is already reshaping labor markets, culture, science, and the economy—yet experts debate its value, risks, and how fast it will integrate into everyday life. Leaders of AI companies forecast near futures in which AI cures all diseases, replaces whole classes of jobs, and supercharges GDP growth. Skeptics see small gains at best, with AI’s impact amounting to little more than a modest boost in productivity—if anything at all.

Despite these clashing narratives, there is little work systematically mapping the full spectrum of views among computer scientists, economists, technologists in the private sector, and the public. We fill this gap with LEAP, a monthly survey tracking the probabilistic forecasts of experts, superforecasters, and the public. Expert participants include top-cited AI and ML scientists, prominent economists, key technical staff at frontier AI companies, and influential policy experts from a broad range of NGOs.

LEAP operates on three key principles:

Accountability: LEAP forecasts are detailed and verifiable, encouraging disciplined thinking and allowing us to track whose predictions prove most accurate.

The wisdom of well-chosen crowds: LEAP emphasizes a diversity of perspectives from people at the top of their fields.

Decision relevance: LEAP’s policy-relevant forecasts help decision-makers plan for likely futures.

As well as collecting thousands of forecasts, LEAP captures rationales—1.7 million words of detailed explanations across the first three survey waves. We use this data to identify key sources of disagreement and to analyze why participants express significant uncertainty about the future effects of AI.

Since we launched LEAP in June 2025, we have completed three survey waves focused on high-level predictions about AI progress, the application of AI to scientific discovery, and widespread adoption and social impact. In this post we’ll introduce the LEAP panel and summarize our findings from the first three waves.

To find out more about LEAP you can:

Visit the LEAP website

Read our launch white paper

Read a summary report on findings from the first three LEAP waves

The LEAP Panel

LEAP forecasters represent a broad swath of highly respected experts, including:

Top computer scientists. 41 of our 76 computer science experts (54%) are professors, and 30 of these 41 (73%) are from top-20 institutions. The remainder are early-career researchers or hold non-tenure-track research roles at academic and non-academic research institutions.

AI industry experts. 20 of our 76 industry respondents (26%) work for one of five leading AI companies: OpenAI, Anthropic, Google DeepMind, Meta, and Nvidia.

Top economists. 54 of our 68 economist respondents (79%) are professors, and 30 (44%) are from the top 50 economics institutions.

Policy and think tank groups. We have 119 respondents working in the field of AI policy. The most-represented organizations include (unordered): Brookings, RAND, Epoch AI, Federation of American Scientists, Center for Security and Emerging Technology, AI Now, Carnegie Endowment, Foundation for American Innovation, GovAI, and Institute for AI Policy and Strategy.

TIME 100. Our respondents include 12 honorees from TIME’s 100 Most Influential People in AI in 2023 and 2024.

LEAP forecasters additionally include 60 superforecasters (forecasters who proved highly accurate in prior geopolitical forecasting tournaments) and 1,400 members of the general public. The general public group largely consists of especially engaged participants in previous research, reweighted to be nationally representative of the U.S.

LEAP Waves 1–3

The first three waves of LEAP asked panelists to forecast AI’s progress and impact across domains. We asked for predictions on AI’s impact on work, scientific research, energy, medicine, business, and more. We also asked panelists to predict AI’s net impact on humanity by 2040 by comparing it to the impact of past technologies.

For a full list of questions in each wave, see here.

Insights from Waves 1–3

1. Experts expect sizable societal effects from AI by 2040.

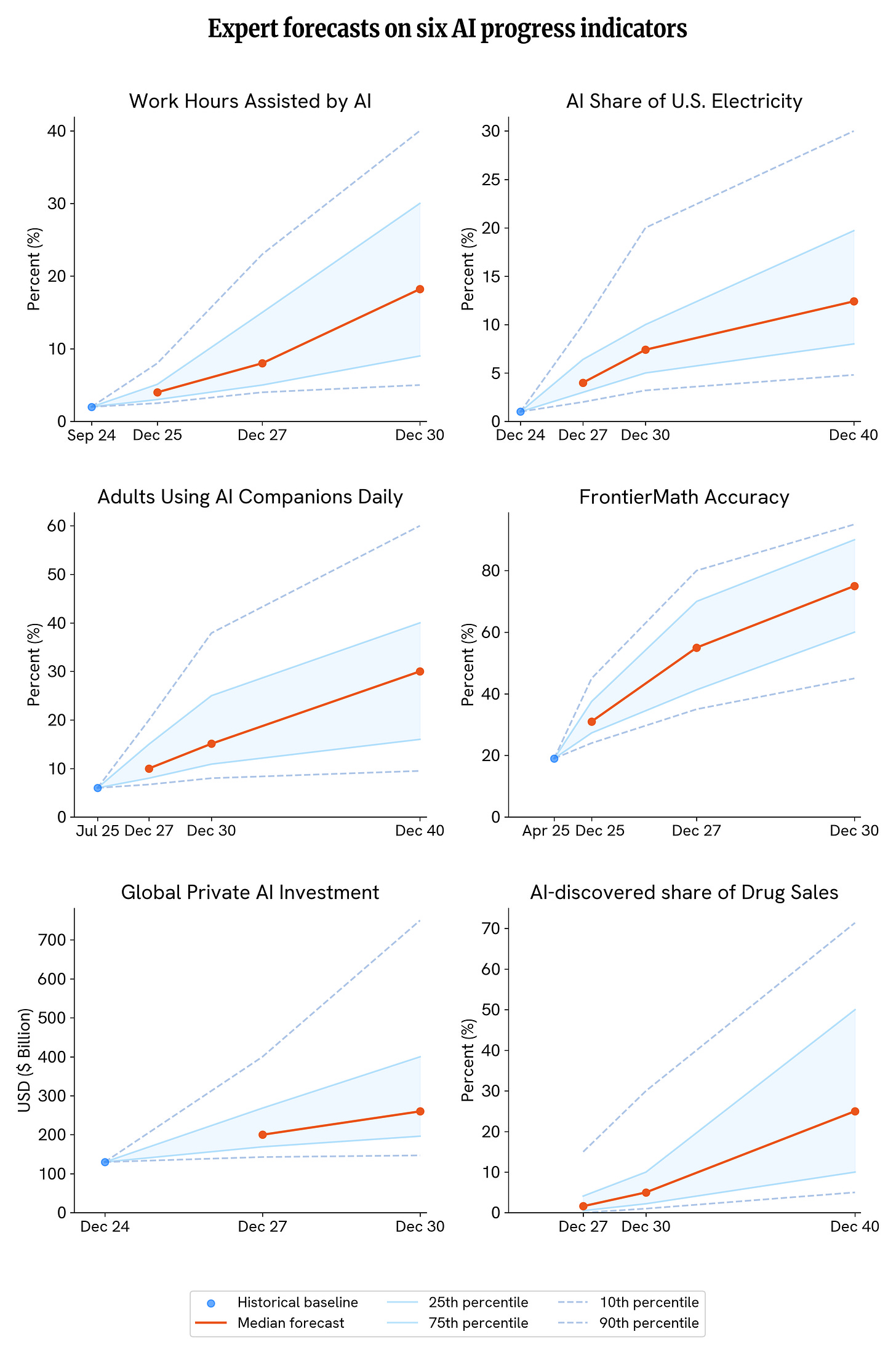

Experts expect substantial increases in the ability of AI systems to solve difficult math problems, the use of AI for companionship and work, electricity usage from AI, and investment in AI:

Work: The median expert forecast is that 18% of work hours in the U.S. in 2030 will be assisted by generative AI, up from approximately 4.1% in November 2024.

Math research: 23% of experts predict that the FrontierMath benchmark will be saturated by the end of 2030. By 2040, experts predict it is more likely than not (60%) that AI will substantially assist in solving one of the Millennium Prize Problems, a set of some of the most difficult unsolved problems in mathematics.

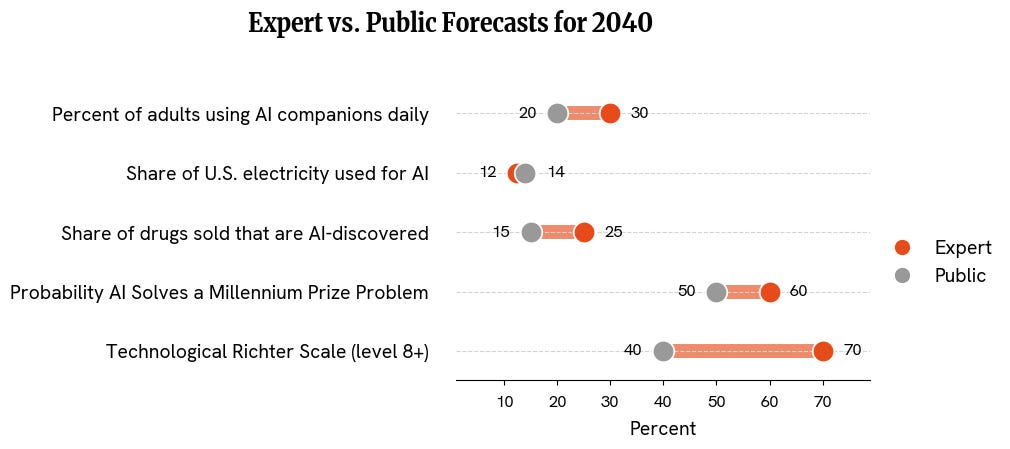

Companionship: The median expert predicts that by 2030, 15% of adults will self-report using AI for companionship, emotional support, social interaction, or simulated relationships at least once daily, up from 6% today. By 2040, that number doubles to 30% of adults.

Electricity usage: The median expert predicts that 7% of U.S. electricity consumption will be used for training and deploying AI systems in 2030, and close to double that (12%) in 2040.

Private AI investment: The median expert predicts that Our World in Data will report $260 billion of global private AI investment by 2030, up from the $130 billion baseline for the series in 2024.[1]

To assess the broader scope of AI’s impacts, we asked experts to assess “slow” versus “fast” scenarios for AI progress, and how AI will compare to other historically significant developments such as the internet, electricity, and the Industrial Revolution. We found:

Speed of AI progress: By 2030, the average expert thinks that 23% of LEAP panelists will say the world most closely mirrors a “rapid” AI progress scenario, where AI writes Pulitzer Prize-worthy novels, collapses years-long research into days and weeks, outcompetes any human software engineer, and independently develops new cures for cancer. Conversely, the average expert believes that 28% of panelists will indicate that reality is closest to a slow-progress scenario, in which AI is a useful assisting technology but falls short of transformative impact.

Societal impact: By 2040, the median expert predicts that the impact of AI will be comparable to a “technology of the century,” akin to electricity or automobiles. Experts also give a 32% chance that AI will be at least as impactful as a “technology of the millennium,” such as the printing press or the Industrial Revolution, and just a 15% chance that AI is equally or less impactful than a “technology of the year” like the VCR.

2. Experts disagree and express substantial uncertainty about the trajectory of AI.

While the median expert predicts substantial AI progress, and a sizable fraction of experts predict fast progress, experts disagree widely. Notably, the top quartile of experts estimate that, in the median scenario, 50% of newly approved drug sales in the U.S. in 2040 will be from AI-discovered drugs, compared to a forecast of just 10% for the bottom quartile of experts.

Further, the top quartile of experts give a forecast of at least 81% that AI will substantially assist with a solution to a Millennium Prize Problem by 2040, compared to just 30% for the bottom quartile of experts.

3. The median expert expects significantly less AI progress than leaders of frontier AI companies.

Leaders of frontier AI companies have made aggressive predictions about AI progress.

Dario Amodei, co-founder and CEO of Anthropic, predicts:

January 2025: “By 2026 or 2027, we will have AI systems that are broadly better than almost all humans at almost all things.”

March 2025: Anthropic also claimed in a response to the US Office of Science and Technology Policy that it anticipates that by 2027 AI systems will exist that equal the intellectual capabilities of “Nobel Prize winners across most disciplines—including biology, computer science, mathematics, and engineering.”

May 2025: Amodei has stated that AI could increase overall unemployment to 10-20% in the next one to five years.

Sam Altman of OpenAI states that:

January 2025: “I think AGI will probably get developed during [Donald Trump’s second presidential] term, and getting that right seems really important.”

Elon Musk, leader of xAI and Tesla, writes:

December 2024: “It is increasingly likely that AI will superset [sic] the intelligence of any single human by the end of 2025 and maybe all humans by 2027/2028. Probability that AI exceeds the intelligence of all humans combined by 2030 is ~100%.”

August 2025: When a user posted “By 2030, all jobs will be replaced by AI and robots,” Musk responded: “Your estimates are about right.”

Demis Hassabis, CEO and co-founder of Google DeepMind, predicts:

August 2025: “We’ll have something that we could sort of reasonably call AGI, that exhibits all the cognitive capabilities humans have, maybe in the next five to 10 years, possibly the lower end of that.”

August 2025: “It’s going to be 10 times bigger than the Industrial Revolution, and maybe 10 times faster.”

These industry leader predictions diverge sharply from our expert panel’s median forecasts:

General capabilities: Lab leaders predict human-level or superhuman AI by 2026-2029, while our expert panel indicates longer timelines for superhuman capabilities. By 2030, the average expert thinks that 23% of LEAP panelists will say the world most closely mirrors a “rapid” AI progress scenario that matches some of these claims.

White-collar jobs: The median expert predicts 2% growth in white-collar employment by 2030 (compared to a 6.8% trend extrapolation). This contrasts with Elon Musk’s suggestion that all jobs might be replaced by 2030 and Dario Amodei’s prediction of 10-20% overall unemployment within the next five years.

Millennium Prize Problems: The median expert gives a 60% chance that AI will substantially assist in solving a Millennium Prize Problem by 2040. Amodei’s prediction of general “Nobel Prize winner” level capabilities by 2026-2027 could imply a much more aggressive timeline, but the implications of Amodei’s predictions are somewhat unclear.

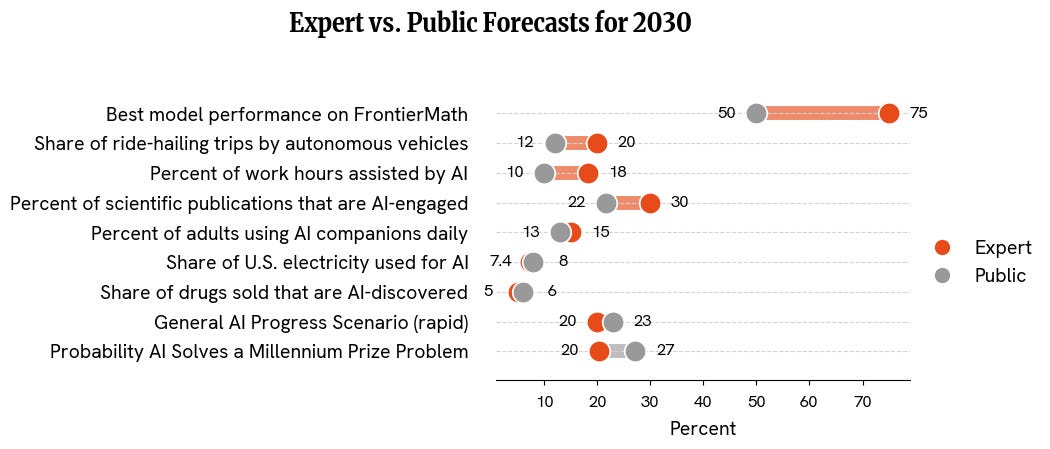

4. Experts predict much faster AI progress than the general public.

The public generally predicts less AI progress by 2030 than experts. Specifically, the general public holds median views about AI progress, capabilities, and diffusion that predict less progress than experts in a large majority (71%) of all cases, are statistically indistinguishable from experts in 9% of cases, and predict more progress in just 21% of forecasts.[2]

Where experts and the public disagree, the public predicts less progress over three times as often as more progress. Across these forecasts that exhibit a clear valence of AI capabilities, a randomly selected expert is 16% more likely than a randomly selected member of the public to predict faster progress than would be expected by random chance.

Some of the major differences include:

Societal impact: On average, experts give a 63% chance that AI will be at least as impactful as a “technology of the century”—like electricity or automobiles—whereas the public gives this a 43% chance.

Autonomous vehicles: The public predicts about 60% as much autonomous vehicle adoption as experts by 2030. The median expert in our sample predicts that usage of autonomous vehicles will grow dramatically, from a baseline of 0.27% of all US rideshare trips in Q4 2024 to 20% by the end of 2030. In comparison, the general public predicts 12%.

Generative AI use: The median expert predicts that 18% of U.S. work hours will be assisted by generative AI in 2030, whereas the general public predicts 10%.

Mathematics: 23% of experts predict that FrontierMath will be saturated by the end of 2030, meaning that AI can autonomously solve a typical math problem that a math PhD student might spend multiple days completing. Only 6% of the public predict the same.

Diffusion into science: Experts predict a roughly 10x increase (from 3% to 30%) in AI-engaged papers across Physics, Materials Science, and Medicine between 2022 and 2030. The general public predicts two-thirds as much diffusion into science.

Drug discovery: By 2040, the median expert predicts that 25% of sales from newly approved U.S. drugs will be from AI-discovered drugs, compared to 15% for the public.

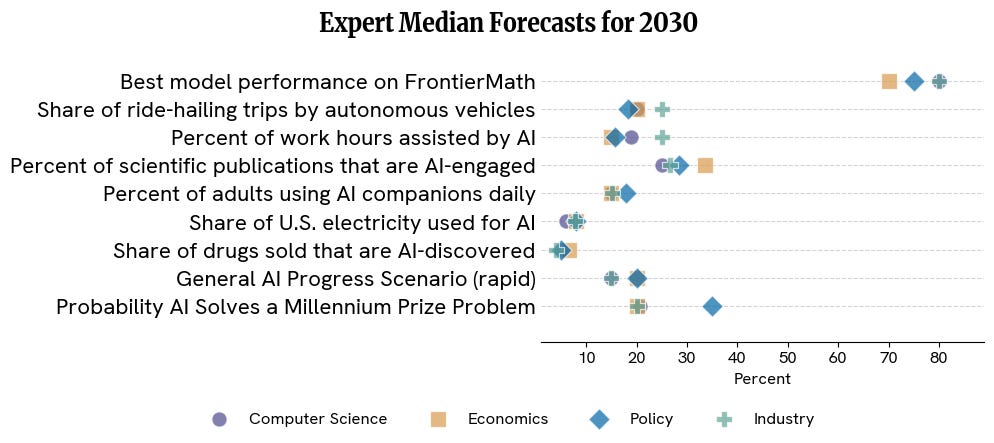

5. There are few differences in prediction between superforecasters and experts, but, where there is disagreement, experts tend to expect more AI progress. We don’t see systematic differences between the beliefs of computer scientists, economists, industry professionals, and policy professionals.

We find no systematic differences between forecasts from different groups of experts. Across all pairwise comparisons of expert categories for each of the questions with a clear AI progress valence, only 32 out of 408 combinations (7.8%) show statistically significant differences (at a 5% threshold), similar to what you would expect from chance. This means that computer scientists, economists, industry professionals, and policy professionals largely predict similar futures as groups, despite there being significant disagreement about AI among experts.

This raises questions about popular narratives that economists tend to be skeptical of AI progress and that employees of AI companies tend to be more optimistic about fast gains in AI capabilities. In other words, while we do see widespread uncertainty among experts about the future of AI systems, capabilities, and diffusion, we fail to find evidence that this disagreement is explained by the domain in which experts work. As LEAP continues, we plan to study what factors most drive expert disagreement.
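The arithmetic behind the pairwise comparison count above can be sketched in a few lines of Python. This is only an illustration, not the analysis code used for LEAP: the variable names are ours, the figure of 68 valenced questions is an assumption borrowed from the footnote on general public forecasts, and the "expected by chance" figure assumes every test is independent and every null hypothesis is true, which real survey data will not satisfy exactly.

```python
from math import comb

# The four expert categories named in the post.
EXPERT_GROUPS = [
    "computer scientists",
    "economists",
    "industry professionals",
    "policy professionals",
]

# ASSUMPTION: the number of questions with a clear AI progress valence is 68,
# the figure the post's footnote gives for general public forecasts.
N_VALENCED_QUESTIONS = 68

# Figures reported in the post.
SIGNIFICANT_COMPARISONS = 32
TOTAL_COMPARISONS = 408
ALPHA = 0.05  # the 5% significance threshold

# Number of distinct pairs of expert groups.
group_pairs = comb(len(EXPERT_GROUPS), 2)

# Pairs times questions; under the assumption above this matches the reported total.
implied_total = group_pairs * N_VALENCED_QUESTIONS

# Share of comparisons flagged as significant.
observed_share = SIGNIFICANT_COMPARISONS / TOTAL_COMPARISONS

# Count of significant results a 5% threshold would produce if no group
# differed at all (simplifying assumption: independent tests).
expected_by_chance = ALPHA * TOTAL_COMPARISONS

print(f"group pairs: {group_pairs}")
print(f"implied comparisons: {implied_total}")
print(f"observed share significant: {observed_share:.1%}")
print(f"expected by chance at alpha={ALPHA}: {expected_by_chance:.1f}")
```

Under these assumptions the six group pairs across 68 questions reproduce the 408 comparisons, and the nominal 5% rate corresponds to about 20 significant results, against the 32 observed.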

Superforecasters and expert groups predict similar futures. Superforecasters are statistically indistinguishable from experts in 69% of forecasts with a clear valence, predict less progress than experts in 26% of forecasts, and more progress in 4% of forecasts.

Where superforecasters and experts disagree, superforecasters usually (86% of such cases) predict less progress. For example, the median expert predicts that use of autonomous vehicles will grow dramatically—from 0.27% of all US rideshare trips in 2024 to 20% by the end of 2030, whereas the median superforecaster predicts less than half that, 8%. Superforecasters also predict less societal impact from AI and less AI-driven electricity use.

Drug discovery is the only setting where superforecasters are more optimistic than experts: By 2040, experts, on average, predict that 25% of sales from recently approved U.S. drugs will be from AI-discovered drugs. Superforecasters predict 45%, almost double.
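The conditional shares quoted in this section (how often one group predicts less progress, given that it disagrees with experts at all) follow from the three-way breakdowns. The sketch below recomputes them from the rounded percentages in the post. The function name is hypothetical, and because the inputs are already rounded, the results can differ from the published figures by a point or so.

```python
def share_less_given_disagreement(pct_less: float, pct_more: float) -> float:
    """Among forecasts where two groups differ, the fraction in which
    the comparison group predicts less progress than experts."""
    return pct_less / (pct_less + pct_more)


# Rounded percentages as reported in the post (forecasts with a clear valence).
superforecasters = {"less": 26, "same": 69, "more": 4}
general_public = {"less": 71, "same": 9, "more": 21}

# Superforecasters: share of disagreements in which they predict less progress.
sf_less_share = share_less_given_disagreement(
    superforecasters["less"], superforecasters["more"]
)

# General public: how many times more often they predict less rather than more.
public_less_to_more = general_public["less"] / general_public["more"]

print(f"superforecasters predict less in {sf_less_share:.0%} of disagreements")
print(f"public predicts less {public_less_to_more:.1f}x as often as more")
```

From the rounded inputs the superforecaster share comes out near 87%, in line with the reported 86% once rounding of the underlying counts is allowed for, and the public ratio is about 3.4, matching "over three times as often."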

Future LEAP waves

LEAP will run for the next three years, eliciting monthly forecasts from our panelists. We plan to release monthly reports on each new wave of LEAP, highlighting new forecasts and conducting more extensive cross-wave analyses.

The next nine waves will cover:

AI R&D

Security and geopolitics

Robotics

Productivity and welfare

Labor and automation

Incidents and harms

LLMs for AGI

Markets

AI safety and alignment

Do you have a suggestion for a question you’d like to see covered in a future LEAP wave? Please submit a question through our online form.

Several of our questions will resolve by the end of 2025. As questions resolve, we will be able to assess the accuracy of experts’ forecasts. This will allow us to identify particularly accurate individual forecasters, and to assess the relative accuracy of different expert subgroups within our sample. We also plan to re-survey the LEAP panel on many questions in order to track how respondents’ views change over time.

In future work, we plan to analyze “schools of thought”: clusters of responses to forecasting questions. We plan to use standard clustering algorithms to search for consistent forecasts across questions, and we will complement this work with analysis of rationales for these various schools of thought.
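To make the idea of clustering forecasts concrete, here is a minimal sketch using plain k-means. The post does not say which algorithm will be used, so k-means is our stand-in for "standard clustering algorithms," and the respondents and forecast values below are entirely hypothetical toy data, not LEAP responses.

```python
import random


def kmeans(points, k, iterations=50, seed=0):
    """Plain k-means over equal-length vectors; returns (centroids, labels)."""
    rng = random.Random(seed)
    # Start from k distinct respondents chosen at random.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each respondent to the nearest centroid
        # (squared Euclidean distance).
        labels = [
            min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, labels


# HYPOTHETICAL data: each row is one respondent's probability forecasts
# on three made-up questions. These are not LEAP responses.
forecasts = [
    [0.80, 0.70, 0.90],
    [0.75, 0.65, 0.85],
    [0.85, 0.80, 0.95],
    [0.20, 0.10, 0.30],
    [0.25, 0.15, 0.20],
    [0.10, 0.20, 0.25],
]

centroids, labels = kmeans(forecasts, k=2)
for row, label in zip(forecasts, labels):
    print(label, row)
```

In this toy setup the first three respondents (consistently high forecasts) and the last three (consistently low forecasts) end up in separate clusters, which is the kind of cross-question consistency a "school of thought" would show. Real panel data would also need decisions about missing responses, question scaling, and the number of clusters.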

You can read more about our future plans for LEAP in our launch white paper.

Updated Nov 25, 2025: This post has been updated to clarify LEAP panelists’ forecasts for the AI progress scenarios question.

[1] Our World in Data notes: (1) “The data likely underestimates total global AI investment, as it only captures certain types of private equity transactions, excluding other significant channels and categories of AI-related spending;” (2) “The source does not fully disclose its methodology and what’s included or excluded. This means it may not fully capture important areas of AI investment, such as those from publicly traded companies, corporate internal R&D, government funding, public sector initiatives, data center infrastructure, hardware production, semiconductor manufacturing, and expenses for research and talent.” More details on what is likely excluded can be found at Our World in Data.

[2] These statements refer to the 68 general public forecasts with a clear valence of AI capabilities, meaning that the questions forecasted had an unambiguous directional association with progress.
